A brief overview of the HRA principle

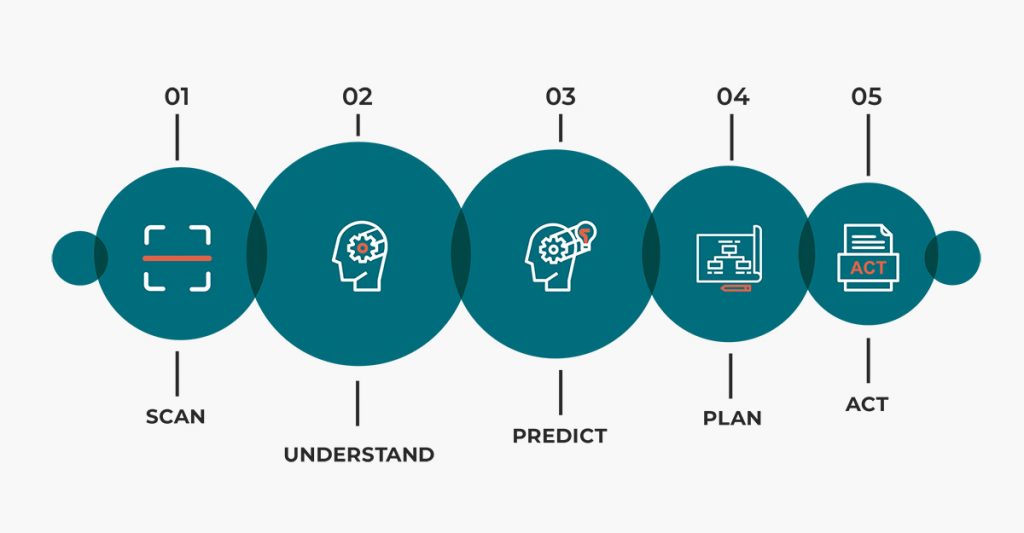

There are many varied methods of Human Reliability Analysis (HRA), with the HSE identifying 72 separate tools in existence (HSE, 2009). Within safety-critical processes, the broad goal of HRA tools (and related tools such as Probabilistic Risk Assessment) is to build up a picture of the individual task-steps that constitute a procedure, and of how these steps are completed (Dougherty & Fragola, 1988; Hollnagel, 1993). HRA may be conducted to map a set of tasks initially and score the nominal error rate, or to assess the extent to which end-user task completion complies with the authorised task procedure (Deacon, Amyotte, Khan, 2010; Flin, Mearns, Fleming, Gordon, 1996; Kjellén, 2007; Skogdalen & Vinnem, 2011). HRA data collection typically involves elements of Task Analysis (TA) and, in some models, Behaviour Analysis (BA), and may consist of end-user task observation, structured interviews and self-report questionnaires (Aiwu & Hong, 2001; Bea, 1998; Gordon, 1998; Kirwan, 1990). The data gathered are used to pinpoint human errors, or potential ‘weak spots’ where human-led risk may arise, throughout the task completion hierarchy. For example, user observation may show that a task-step was skipped, or that a correct action was undertaken but at the wrong procedural stage. Some forms of HRA treat this as the initial stage of risk mapping; the probability of error occurrence can then be generated by feeding these data into appropriate prediction techniques (e.g. THERP; SHEAN; ASEP) (Harrison, 2004; Mannan, 2004; Silverman, 1992). Alternatively, risk analysis tools may further deconstruct user observations and questionnaire data, focusing on how positive and negative performance shaping factors influence the reliability of the task. This analysis typically results in a risk prediction matrix (Jo & Park, 2003; Mosneron-Dupin et al., 1997).
Data output from an HRA is typically used to develop actions that minimise or mitigate human error at the relevant point of ‘risk’ in a procedure, thereby improving the reliability of human performance (Kirwan, 1990).
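
Techniques such as THERP and ASEP are far more detailed than this (they model dependence between steps and recovery opportunities), but the basic idea of rolling step-level error probabilities up to a procedure-level figure, and then locating the step most in need of mitigation, can be sketched briefly. This is a minimal sketch under stated assumptions: steps fail independently, any single failed step fails the procedure, and the step names, HEP values and function names are all hypothetical placeholders rather than figures from any published table.

```python
from math import prod

# Hypothetical task steps with illustrative nominal human error probabilities
# (HEPs). These are placeholders, not values from THERP, ASEP or any HRA table.
procedure = {
    "isolate valve": 0.003,
    "verify isolation": 0.01,
    "vent line": 0.001,
    "apply lock-out tag": 0.005,
}


def procedure_failure_probability(step_heps):
    """Probability that at least one step fails.

    Assumes steps fail independently and that a single failed step
    fails the whole procedure (a simple series structure).
    """
    return 1 - prod(1 - p for p in step_heps.values())


def riskiest_step(step_heps):
    """Return the step with the highest HEP, the obvious first target for mitigation."""
    return max(step_heps, key=step_heps.get)


print(f"P(procedure fails) = {procedure_failure_probability(procedure):.4f}")
print("Highest-risk step:", riskiest_step(procedure))
```

The independence assumption is the main simplification here; real HRA methods adjust for the fact that an error on one step often makes errors on related steps more likely.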

Performance shaping factors

Human performance can be influenced by many different Human Factors variables, both physical and psychological. These influences may occur before or during a task. For example, sudden ambient noise such as an alarm test may distract a worker partway through a task; by contrast, distraction may also arise before a task begins, for instance when the worker is given a last-minute deadline for another piece of work, ‘priming’ them with distraction (May, Kane, Hasher, 1995; Hollands & Wickens, 1999; Wickens, 1992). Other contributing factors include environmental changes, current physical health, age, attitudes, emotions, state of mind and cognitive biases. Traditional Human Factors such as distraction, situation awareness, fatigue, high cognitive load and stress can also have a significant impact on task performance (Flin, Mearns, O’Connor, Bryden, 2000; Wickens, 1992).
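
One common way quantitative HRA methods account for performance shaping factors (PSFs) is to scale a nominal human error probability by multipliers for each active factor. The sketch below illustrates that idea only; the factor names and multiplier values are hypothetical assumptions chosen to echo the examples above, and the bounding formula, which keeps the result between 0 and 1, is borrowed from SPAR-H and applied here to every case for simplicity.

```python
from math import prod

# Hypothetical PSF multipliers: values > 1 degrade performance, < 1 improve it.
# These numbers are illustrative assumptions, not calibrated figures.
PSF_MULTIPLIERS = {
    "alarm_test_noise": 2.0,      # distraction arising during the task
    "last_minute_deadline": 2.0,  # distraction 'primed' before the task
    "fatigue": 5.0,
    "high_cognitive_load": 2.0,
    "good_procedure": 0.5,        # a positive shaping factor
}


def adjusted_hep(nominal_hep, active_psfs):
    """Scale a nominal HEP by the product of the active PSF multipliers.

    Uses the bounded form HEP = N*C / (N*(C - 1) + 1), where N is the
    nominal HEP and C the composite multiplier, so the result stays
    within [0, 1] even when C is large.
    """
    composite = prod(PSF_MULTIPLIERS[name] for name in active_psfs)
    return nominal_hep * composite / (nominal_hep * (composite - 1) + 1)


print(adjusted_hep(0.001, []))                               # no PSFs: nominal HEP
print(adjusted_hep(0.001, ["fatigue", "alarm_test_noise"]))  # degraded conditions
```

A plain product without the bounding term is simpler but can return values above 1 when several strong negative factors coincide, which is why the bounded form is used here.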

Communications, the missing link?

While HRA tools provide a useful framework for risk-mapping specific tasks, more focus could be placed on end-user interviews to elicit the ‘real’ reasons behind human errors and non-compliance with authorised task procedures.

End-user non-compliance interviews: asking the right questions

Within the energy sector, typical HRA data gathering focuses on converting human errors, procedural deviations, violations and non-compliance into a probability of risk occurrence (Bea, 1998; Gordon, 1998). However, this approach favours a statistically driven concept of risk probability over the investigation and understanding of contextual factors, such as end-users' perceptions of how and why an error occurred and what could be done to prevent it. Consider the case of procedural non-compliance: work processes are frequently authored by engineering or technical staff working away from the work-site (Carrillo, 2004; O’Dea & Flin, 2001), and may therefore not reflect how the work is actually carried out.

While Human Factors experts can provide extensive input into making procedures accessible, relevant and logical to end-users, the context and positioning of the process among other tasks at the worksite can significantly affect adherence. Additionally, group and individual perceptions of safety culture, as well as the established organisational climate, norms, values, attitudes and behaviours, have a considerable impact on the success of compliance initiatives (Cooper & Sutherland, 1987; Flin, Mearns, Gordon, Fleming, 1996, 1998; Sutherland & Cooper, 1991).

The initial implementation of a process or procedure on an offshore asset is the ideal time to conduct end-user reliability analysis (Dunnette & Hough, 1991; Gopher & Kimchi, 1989; Schraagen, Chipman, Shalin, 2000). A modified HRA model built around confidential, semi-structured, open-ended interviews (qualitative data gathering) offers an opportunity to investigate the barriers that end-users perceive as preventing exemplary task completion. An open or loosely structured interview allows end-users to highlight compliance-impacting issues that exist both within and outwith the confines of the task process. For example, analysis of user-interview data may show that a critical task is scheduled at a point of traditionally low vigilance, such as immediately before or after a rest break, or between two other high-demand tasks; such factors can significantly affect available cognitive resources, fatigue and task focus (Anderson, 1990; Pashler, 1999; Styles, 2006). Capturing additional individual demands such as time pressure, work stress/strain and overall task load allows a holistic and realistic picture of the ‘real’ task demands to be built. This approach provides a framework for developing user-centric solutions that can significantly improve end-users' ability to comply with procedures, leading to safer and more efficient working.

A realistic and user-centered approach to HRA

A bespoke, encompassing HRA framework should focus on examining end-user task completion, investigating how processes are ‘really completed’ within the work environment, as opposed to users simulating an inaccurate or outdated procedure for the benefit of ‘passing a test’. Disparities between the authorised task procedure and the ‘real-world’ process should be assessed to identify where procedures may be improved to benefit end-users. Investigation should focus on highlighting the ‘real’ barriers to compliance, existing both within and outwith the task process, and on pinpointing where additional end-user support is required. This can be reinforced by confidential, anonymous self-report questionnaires, administered before, during and after task completion, covering barriers to compliance, workplace attitudes, perceptions, norms, cultures, values, individual cognitive states, fatigue, stress/strain and situation awareness, among other information.
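
Where observation data are recorded as ordered lists of task steps, part of the comparison between the authorised procedure and the ‘real-world’ process can be automated. The helper below is a hypothetical sketch, assuming each step has a unique name; it flags the deviation types described earlier (skipped steps, and correct actions at the wrong procedural stage) as well as steps that are not in the procedure at all. It only shows where practice diverges from the procedure; explaining why is the job of the interviews and questionnaires.

```python
def compare_to_procedure(authorised, observed):
    """Compare an observed sequence of task steps with the authorised procedure.

    Assumes every step has a unique name. Returns steps that were skipped,
    steps not in the procedure at all, and steps performed at the wrong stage.
    """
    authorised_set = set(authorised)
    observed_set = set(observed)
    skipped = [step for step in authorised if step not in observed_set]
    extra = [step for step in observed if step not in authorised_set]

    # Walk the observed steps that belong to the procedure; a step whose
    # authorised position comes before one already performed is flagged as
    # out of order. This is a simple heuristic, not a minimal-edit comparison.
    position = {step: i for i, step in enumerate(authorised)}
    out_of_order = []
    furthest = -1
    for step in observed:
        if step not in position:
            continue
        if position[step] < furthest:
            out_of_order.append(step)
        else:
            furthest = position[step]
    return {"skipped": skipped, "extra": extra, "out_of_order": out_of_order}


# Hypothetical example: an authorised isolation procedure and one observed run.
authorised = ["isolate valve", "verify isolation", "vent line", "apply lock-out tag"]
observed = ["isolate valve", "vent line", "verify isolation", "radio control room"]

print(compare_to_procedure(authorised, observed))
```

In this example the lock-out tag is reported as skipped, the radio call as an extra step, and the isolation check as performed at the wrong stage; each of these is a prompt for an interview question rather than a conclusion in itself.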

In summary, an HRA framework that takes a holistic, end-user focused approach can provide valuable information on how the work environment, culture, organisation and current work processes may affect human error and procedural compliance during task/procedure completion. Examining and discussing highlighted procedural violations through semi-structured end-user interviews and questionnaires allows further probing of the factors underpinning human error, and offers a rational route to novel solutions for the ‘real’ end-user difficulties that drive human error and non-compliance.

References

Aiwu, Z. L. H. S. H., & Hong, Y. (2001). Methods for Human Reliability Analysis. China Safety Science Journal, 3.

Anderson, J. R. (1990). Cognitive psychology and its implications. WH Freeman/Times Books/Henry Holt & Co.

Bea, R. G. (1998). Human and organization factors: engineering operating safety into offshore structures. Reliability Engineering & System Safety, 61(1), 109-126.

Bea, R. G. (2002). Human and organizational factors in reliability assessment and management of offshore structures. Risk Analysis, 22(1), 29-45.

Carrillo, P. (2004). Managing knowledge: lessons from the oil and gas sector. Construction Management and Economics, 22(6), 631-642.

Cooper, C. L., & Sutherland, V. J. (1987). Job stress, mental health, and accidents among offshore workers in the oil and gas extraction industries. Journal of Occupational and Environmental Medicine, 29(2), 119-125.

Deacon, T., Amyotte, P. R., & Khan, F. I. (2010). Human error risk analysis in offshore emergencies. Safety Science, 48(6), 803-818.

Dougherty, E. M., & Fragola, J. R. (1988). Human reliability analysis.

Dunnette, M. D., & Hough, L. M. (1991). Handbook of industrial and organizational psychology, Vol. 2. Consulting Psychologists Press.

Flin, R., Mearns, K., Fleming, M., & Gordon, R. (1996). Risk perception and safety in the offshore oil and gas industry. Sheffield: HSE Books.

Flin, R., Mearns, K., Gordon, R., & Fleming, M. (1996). Risk perception by offshore workers on UK oil and gas platforms. Safety Science, 22(1), 131-145.

Flin, R., Mearns, K., Gordon, R., & Fleming, M. (1998, January). Measuring safety climate on UK offshore oil and gas installations. In SPE International Conference on Health Safety and Environment in Oil and Gas Exploration and Production. Society of Petroleum Engineers.

Flin, R., Mearns, K., O'Connor, P., & Bryden, R. (2000). Measuring safety climate: identifying the common features. Safety Science, 34(1), 177-192.

Gopher, D., & Kimchi, R. (1989). Engineering psychology. Annual Review of Psychology, 40(1), 431-455.

Gordon, R. P. (1998). The contribution of human factors to accidents in the offshore oil industry. Reliability Engineering & System Safety, 61(1), 95-108.

Harrison, M. (2004). Human error analysis and reliability assessment. Workshop on Human Computer Interaction and Dependability, 46th IFIP Working Group 10.4 Meeting, Siena, Italy, July 3–7, 2004.

Hollands, J. G., & Wickens, C. D. (1999). Engineering psychology and human performance.

Hollnagel, E. (1993). Human reliability analysis: context and control (Vol. 145). London: Academic Press.

HSE (2009). Review of human reliability assessment methods (Research Report RR679). Health and Safety Executive.

Jo, Y. D., & Park, K. S. (2003). Dynamic management of human error to reduce total risk. Journal of Loss Prevention in the Process Industries, 16(4), 313-321.

Khan, F. I., Amyotte, P. R., & DiMattia, D. G. (2006). HEPI: A new tool for human error probability calculation for offshore operation. Safety Science, 44(4), 313-334.

Kirwan, B. (1990). Human reliability assessment. Encyclopedia of Quantitative Risk Analysis and Assessment.

Kjellén, U. (2007). Safety in the design of offshore platforms: Integrated safety versus safety as an add-on characteristic. Safety Science, 45(1), 107-127.

Mannan, S. (Ed.). (2004). Lees' Loss prevention in the process industries: Hazard identification, assessment and control. Butterworth-Heinemann.

May, C. P., Kane, M. J., & Hasher, L. (1995). Determinants of negative priming. Psychological Bulletin, 118(1), 35.

Mohaghegh, Z., Kazemi, R., & Mosleh, A. (2009). Incorporating organizational factors into Probabilistic Risk Assessment (PRA) of complex socio-technical systems: A hybrid technique formalization. Reliability Engineering & System Safety, 94(5), 1000-1018.

Mosneron-Dupin, F., Reer, B., Heslinga, G., Sträter, O., Gerdes, V., Saliou, G., & Ullwer, W. (1997). Human-centered modeling in human reliability analysis: some trends based on case studies. Reliability Engineering & System Safety, 58(3), 249-274.

Pashler, H. E. (1999). The psychology of attention. MIT Press.

Rasmussen, J., Nixon, P., & Warner, F. (1990). Human error and the problem of causality in analysis of accidents [and discussion]. Philosophical Transactions of the Royal Society of London. B, Biological Sciences, 327(1241), 449-462.

Reason, J. (1990). The contribution of latent human failures to the breakdown of complex systems. Philosophical Transactions of the Royal Society of London. B, Biological Sciences, 327(1241), 475-484.

Schraagen, J. M., Chipman, S. F., & Shalin, V. L. (Eds.). (2000). Cognitive task analysis. Psychology Press.

Silverman, B. (1992). Critiquing human error: A knowledge-based human-computer collaboration approach. Academic Press.

Skogdalen, J. E., & Vinnem, J. E. (2011). Quantitative risk analysis offshore—Human and organizational factors. Reliability Engineering & System Safety, 96(4), 468-479.

Styles, E. (2006). The psychology of attention. Psychology Press.

Sutherland, V. J., & Cooper, C. L. (1991). Personality, stress and accident involvement in the offshore oil and gas industry. Personality and Individual Differences, 12(2), 195-204.

Wickens, C. D. (1992). Engineering psychology and human performance. HarperCollins Publishers.